Abstract

Background: Empirical models have been an integral part of everyday clinical practice in ophthalmology since the introduction of the Sanders-Retzlaff-Kraff (SRK) formula. Recent developments in statistical learning (artificial intelligence, AI) now enable an empirical approach to a wide range of ophthalmological questions with unprecedented precision.

Objective: Which criteria must be considered for the evaluation of AI-related studies in ophthalmology?

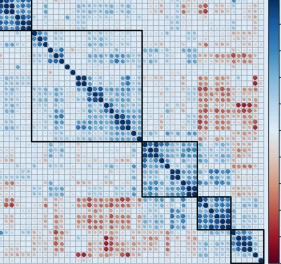

Material and methods: Prediction of visual acuity (continuous outcome) and classification of healthy versus diseased eyes (discrete outcome) served as worked examples, using retrospectively compiled optical coherence tomography data (50 eyes of 50 patients, 50 healthy eyes of 50 subjects). The data were analyzed with nested cross-validation, which was used for learning algorithm selection and hyperparameter optimization.
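To make the idea of nested cross-validation concrete, the following is a minimal sketch and not the authors' pipeline. It assumes a toy k-nearest-neighbour regressor with a single hyperparameter and synthetic data. All function names, fold counts, and candidate values are hypothetical. The inner loop selects the hyperparameter using training data only, and the outer loop scores the tuned model on data it never saw.

```python
import random
from statistics import mean

def k_folds(indices, k):
    """Split a list of indices into k roughly equal, disjoint folds."""
    return [indices[i::k] for i in range(k)]

def knn_predict(train, x, k):
    """Predict y at x as the mean target of the k nearest training points."""
    nearest = sorted(train, key=lambda pair: abs(pair[0] - x))[:k]
    return mean(y for _, y in nearest)

def mae(train, test, k):
    """Mean absolute error of a k-NN model fitted on train, scored on test."""
    return mean(abs(y - knn_predict(train, x, k)) for x, y in test)

def split(data, fold):
    """Return (train, test) where test holds the points indexed by fold."""
    held_out = set(fold)
    train = [data[i] for i in range(len(data)) if i not in held_out]
    test = [data[i] for i in fold]
    return train, test

def cross_validated_mae(data, k_neighbors, n_folds):
    """Plain cross-validation, used here as the inner loop."""
    folds = k_folds(list(range(len(data))), n_folds)
    return mean(mae(*split(data, fold), k_neighbors) for fold in folds)

def nested_cv(data, candidates=(1, 3, 5, 7), outer_folds=5, inner_folds=4):
    """Outer loop estimates error; inner loop tunes k on training data only."""
    outer_scores = []
    for fold in k_folds(list(range(len(data))), outer_folds):
        train, test = split(data, fold)
        # Inner loop: the held-out outer fold plays no part in tuning.
        best_k = min(candidates,
                     key=lambda k: cross_validated_mae(train, k, inner_folds))
        # Outer loop: score the tuned model on the untouched fold.
        outer_scores.append(mae(train, test, best_k))
    return mean(outer_scores)

# Synthetic data (an assumption for illustration, not the study data).
rng = random.Random(0)
data = [(x, 0.5 * x + rng.gauss(0, 0.1))
        for x in (rng.uniform(0, 1) for _ in range(100))]
print(nested_cv(data))
```

Because the outer test folds never influence the choice of hyperparameter, the resulting error estimate is not inflated by the tuning step. Selecting among several learning algorithms would follow the same pattern, with the candidate list holding algorithms instead of values of `k`.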

Results: With nested cross-validation used for training, visual acuity could be predicted in the separate test dataset with a mean absolute error (MAE) of 0.142 LogMAR (95% confidence interval [CI]: 0.077; 0.207). Healthy versus diseased eyes could be classified in the test dataset with an agreement of 0.92 (Cohen’s kappa). In contrast, an example of incorrect learning algorithm and variable selection resulted in an MAE for visual acuity prediction of 0.229 LogMAR [0.150; 0.309] in the test dataset. The drastic overfitting became obvious when this MAE was compared with the null model MAE (0.235 LogMAR [0.148; 0.322]).
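The measures reported here can be sketched in a few lines. The example below is illustrative only. It assumes a normal-approximation 95% CI for the MAE (the abstract does not state how the intervals were computed) and a null model that always predicts the training-set mean. The function names are hypothetical.

```python
import math
from statistics import mean, stdev

def mae_with_ci(y_true, y_pred, z=1.96):
    """MAE with a normal-approximation 95% CI (an assumed CI method)."""
    errors = [abs(t - p) for t, p in zip(y_true, y_pred)]
    m = mean(errors)
    half_width = z * stdev(errors) / math.sqrt(len(errors))
    return m, (m - half_width, m + half_width)

def null_model_predictions(y_train, n):
    """Null model: ignore all predictors and predict the training mean."""
    return [mean(y_train)] * n

def cohen_kappa(labels_a, labels_b):
    """Chance-corrected agreement between two label sequences.

    Undefined (division by zero) if both sequences use one single category.
    """
    n = len(labels_a)
    categories = set(labels_a) | set(labels_b)
    p_observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    p_expected = sum((labels_a.count(c) / n) * (labels_b.count(c) / n)
                     for c in categories)
    return (p_observed - p_expected) / (1 - p_expected)
```

A model's MAE is only informative relative to the null model: if `mae_with_ci(y_test, model_predictions)` is no better than `mae_with_ci(y_test, null_model_predictions(y_train, len(y_test)))`, the model has learned nothing that generalizes, however well it fitted the training data.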

Conclusion: Selection of an unsuitable measure of the goodness-of-fit, inadequate validation, or withholding of a null or reference model can obscure the actual goodness-of-fit of AI models. The illustrated pitfalls can help clinicians to identify such shortcomings.

Pfau M, Walther G, von der Emde L, et al. Artificial intelligence in ophthalmology : Guidelines for physicians for the critical evaluation of studies. Der Ophthalmologe : Zeitschrift der Deutschen Ophthalmologischen Gesellschaft 2020;117:973-988.